I've been trying to learn TensorFlow by working through the Udacity Deep Learning MOOC. All the programming assignments are based on Jupyter Notebooks. Unfortunately, since I set up my computer with an NVIDIA GPU, I've been using Virtualenv to manage my Python distributions, as recommended in the TensorFlow installation documents. However, I had a really hard time getting IPython and Jupyter configured so they could access all the packages I needed, until I read this.

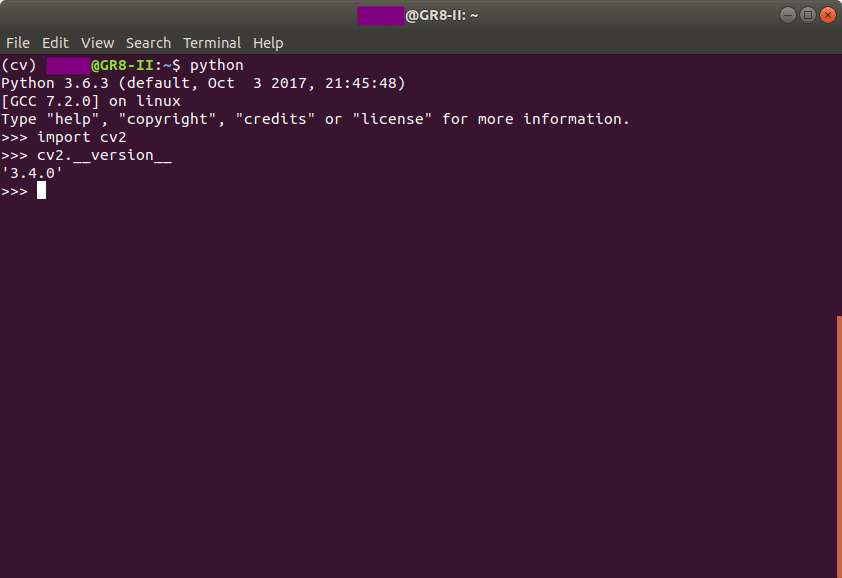

The solution is quite simple. From inside your tensorflow virtualenv, first install ipykernel. Then use it to register that environment as a Jupyter kernel named tensorflow.

```shell
$ source ~/tensorflow/bin/activate
$ pip install ipykernel
$ python -m ipykernel install --user --name=tensorflow
```
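To confirm the registration worked, `jupyter kernelspec list` prints every kernel Jupyter knows about. With `--user`, the new kernel should appear under your home directory (on Linux, `~/.local/share/jupyter/kernels/tensorflow`). The helper below is a small sketch of my own, not part of Jupyter: the function name `has_kernel` is hypothetical, and it assumes the plain-text listing format of a header line followed by one "name path" pair per line.

```shell
#!/usr/bin/env bash
# has_kernel NAME: succeed if a kernel called NAME appears in a
# `jupyter kernelspec list` listing read from stdin (hypothetical helper).
has_kernel() {
  # Skip the "Available kernels:" header line, keep only the first column
  # (the kernel name), then require an exact whole-line match.
  awk 'NR > 1 { print $1 }' | grep -qx -- "$1"
}
```

Usage: `jupyter kernelspec list | has_kernel tensorflow && echo "tensorflow kernel is registered"`. Matching the whole name avoids false positives such as `tensor` matching `tensorflow`.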

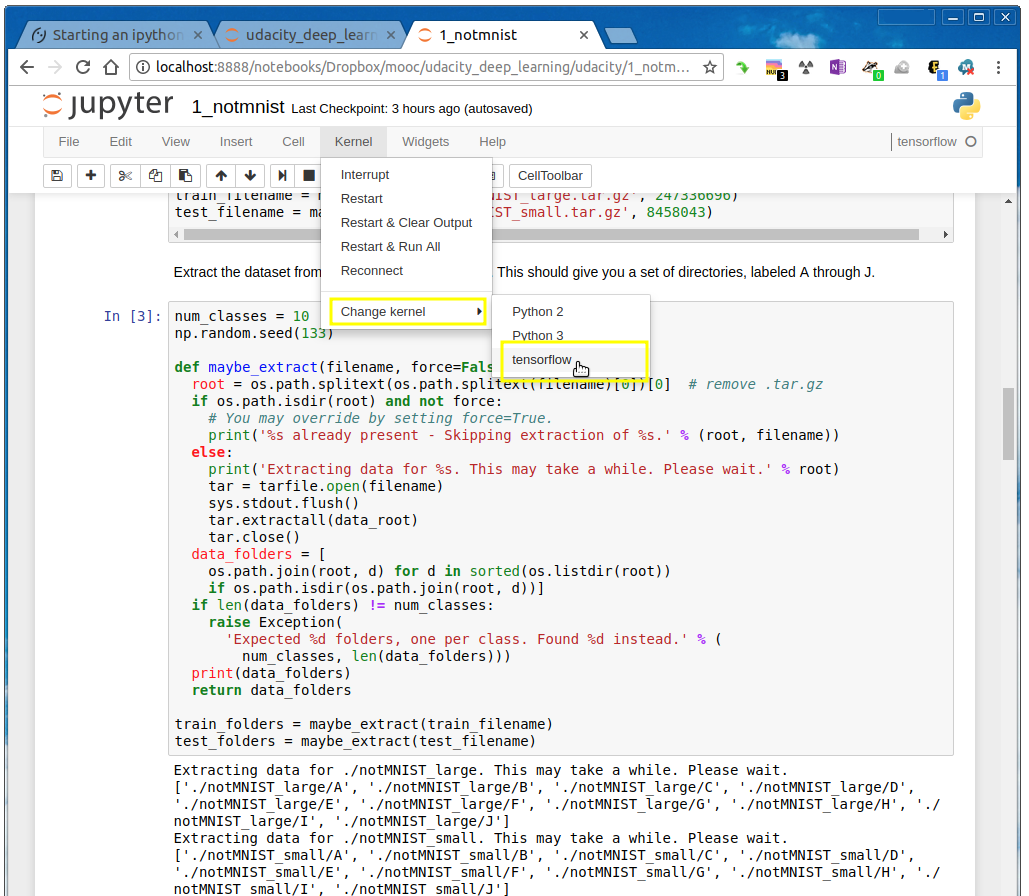

Finally, when you open a notebook, you will have to switch its kernel from the default Python kernel to the tensorflow one you just registered (in the classic Notebook interface, via the Kernel > Change kernel menu).